Microsoft Machine Learning Kit for Lobe with Raspberry Pi 4 4GB

Machine learning is a transformative tool that's redefining how we build software, but until now it was only accessible to a small group of experts. At Adafruit, we think machine learning should be accessible to everyone. That's why we are partnering with Lobe to bring you an easy-to-use machine learning kit, so you can bring your machine learning ideas to life.

This kit includes all the parts you need, at a discount!

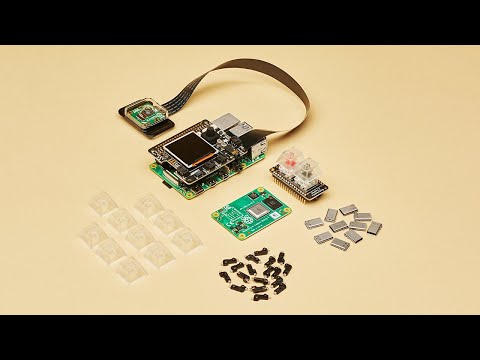

Kit includes:

- Adafruit BrainCraft HAT - Machine Learning for Raspberry Pi 4

- Raspberry Pi 4 Model B - 4 GB RAM

- Raspberry Pi Camera Board v2 - 8 Megapixels

- Adafruit Raspberry Pi Camera Board Case with 1/4" Tripod Mount

- Official Raspberry Pi Power Supply 5.1V 3A with USB C - 1.5 meter long

- Flex Cable for Raspberry Pi Camera or Display - 300mm / 12"

- 8GB SD/MicroSD Memory Card

To create the machine learning models, we'll use Lobe, an easy-to-use app that helps you train machine learning models for free. To use it, we just need to show Lobe some examples of what we want it to learn, and Lobe will train a custom machine learning model for us. After that, we'll be able to use our model directly on the Raspberry Pi, or inside Lobe itself.
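
As a rough illustration of what "using the model on the Raspberry Pi" can look like once a model returns its results, the sketch below picks the most confident label from a list of (label, confidence) pairs. The pair format, the `top_label` helper, the example labels, and the 0.6 threshold are all assumptions made for illustration and are not taken from Lobe's documentation. The Lobe GitHub repo listed at the end of this page has the actual code for this kit.

```python
from typing import List, Optional, Tuple


def top_label(
    predictions: List[Tuple[str, float]], min_confidence: float = 0.6
) -> Optional[str]:
    """Return the most confident label, or None if nothing clears the threshold."""
    if not predictions:
        return None
    # Pick the pair with the highest confidence value.
    label, confidence = max(predictions, key=lambda pair: pair[1])
    return label if confidence >= min_confidence else None


# Hypothetical model output for a single camera frame.
example = [("thumbs up", 0.91), ("thumbs down", 0.06), ("no hand", 0.03)]
print(top_label(example))
```

Returning `None` for low-confidence frames is a design choice that lets a project ignore uncertain predictions instead of reacting to every frame.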

No soldering is required to use this kit. Plug the parts together and you can begin your machine learning journey within the hour!

More about the BrainCraft HAT

The idea behind the BrainCraft HAT is that you'd be able to “craft brains” for Machine Learning on the EDGE, with Microcontrollers & Microcomputers. On ASK AN ENGINEER, our founder & engineer chatted with Pete Warden, the technical lead of the mobile and embedded TensorFlow group on Google's Brain team, about what would be ideal for a board like this.

And here's what we designed! The BrainCraft HAT has a 240×240 TFT IPS display for inference output, slots for the camera connector cable for imaging projects, a 5-way joystick and button for UI input, left and right microphones, stereo headphone out, stereo 1 W speaker out, three RGB DotStar LEDs, two 3-pin STEMMA connectors on PWM pins so they can drive NeoPixels or servos, and a Grove/STEMMA/Qwiic I2C port. This will let people build a wide range of audio/video AI projects while also allowing easy plug-in of sensors and robotics!

A controllable mini fan attaches to the bottom and can be used to keep your Pi cool while doing intense AI inference calculations. Most importantly, there's an On/Off switch that will completely disable the audio codec, so that when it's off there's no way it's listening to you.

Features:

- 1.54" IPS TFT display with 240x240 resolution that can show text or video

- Stereo speaker ports for audio playback, such as text-to-speech, alerts, or a voice assistant.

- Stereo headphone out for audio playback through a stereo system, headphones, or powered speakers.

- Stereo microphone input - perfect for making your very own smart home assistants

- Two 3-pin JST STEMMA connectors that can be used to connect more buttons, a relay, or even some NeoPixels!

- STEMMA QT plug-and-play I2C port, which can be used with any of our 50+ I2C STEMMA QT boards, or to connect to Grove I2C devices with an adapter cable.

- 5-Way Joystick + Button for user interface and control.

- Three RGB DotStar LEDs for colorful LED feedback.
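
To give a feel for the "colorful LED feedback" idea, here is a minimal sketch that turns a model confidence value into colors for the three DotStar LEDs, like a tiny bar graph. It only computes the colors; actually sending them to the LEDs needs an LED driver library (Adafruit publishes a CircuitPython DotStar library), which is left out here. The `confidence_to_leds` name and the color choices are assumptions for illustration.

```python
from typing import List, Tuple

Color = Tuple[int, int, int]  # (red, green, blue), each 0-255

GREEN: Color = (0, 255, 0)
OFF: Color = (0, 0, 0)


def confidence_to_leds(confidence: float, num_leds: int = 3) -> List[Color]:
    """Light more LEDs as confidence rises from 0.0 to 1.0."""
    # Clamp out-of-range values so the bar never under- or overflows.
    clamped = max(0.0, min(1.0, confidence))
    lit = min(num_leds, int(clamped * num_leds))
    return [GREEN] * lit + [OFF] * (num_leds - lit)


print(confidence_to_leds(0.7))
```

Keeping the color calculation separate from the hardware calls means it can be tried on any computer before moving to the Pi.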

The STEMMA QT port means you can attach heat image sensors like the Panasonic Grid-EYE or MLX90640. Heat-sensitive cameras can be used as a person detector, even in the dark! An external accelerometer can be attached for gesture or vibration sensing, for example in machinery/industrial predictive maintenance projects.
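
One simple way to think about heat-based person detection is to count how many pixels are noticeably warmer than the background. The sketch below does that on an 8×8 grid of temperatures in °C, the size a Grid-EYE style sensor produces. It is not a trained model and not taken from any sensor library: the `looks_like_person` helper, the 4 °C margin, and the four-pixel minimum are assumptions chosen for illustration, and the frames are synthetic.

```python
from statistics import median
from typing import List


def looks_like_person(
    frame: List[List[float]], margin_c: float = 4.0, min_warm_pixels: int = 4
) -> bool:
    """Return True if enough pixels are warmer than the background."""
    pixels = [temp for row in frame for temp in row]
    # The median approximates room temperature when most pixels are background.
    background = median(pixels)
    warm = sum(1 for temp in pixels if temp >= background + margin_c)
    return warm >= min_warm_pixels


# Synthetic frames: an empty 22 °C room, then the same room with a warm patch.
empty_room = [[22.0] * 8 for _ in range(8)]
with_person = [row[:] for row in empty_room]
for r in range(2, 5):
    for c in range(3, 5):
        with_person[r][c] = 30.0

print(looks_like_person(empty_room), looks_like_person(with_person))
```

A fixed threshold like this is easily fooled by other warm objects, which is where a trained machine learning model can do better.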

Lobe GitHub repo: https://github.com/lobe/lobe-adafruit-kit